Do you need Datapump?

At nearly every customer I’m visiting there is a need to take export dumps of the entire database every single day. But why?

You probably know the answer: because the application team requested it to reset a schema or a table to an earlier time in the event due to a user failure, batch error or whatever.

My next question is this: “How often did you reset a table or a schema within the last 12 months?”

Answer: “Not a single time… but we had to do it some years ago with one of our databases. So its still important.”

Let me recap:

You take terabytes of dumpfiles every day, which not only consumes space, but will degrade performance and probably shrink the maintenance window for batches etc. only for a protection which did not happen at all for all of your databases within the last 12 months? And keep in mind: datapump export is not a backup! So you hopefully have a valid RMAN backup as well. Which again consumes space, will degrade performance and shrinks the maintenance window for batches. Isn’t there a better way to fulfill this “need”?

We will first look at the reasons why it might be necessary to reset a table or schema. Even though it sounds strange, but I have seen some production databases where developers had full access or more where the application run with DBA privilege. Not only is this a security and compliance issue but an easy way to destroy data and therefore to reset tables or schemas.

So here is my first recommendation: Do not allow development on a production database. If a senior developer or analyst needs access, it should be limited to a read only access. If data needs to be changed via a tool like Toad, grant the minimum privilege (e.g. update) to that owner but only for a very limited time frame.

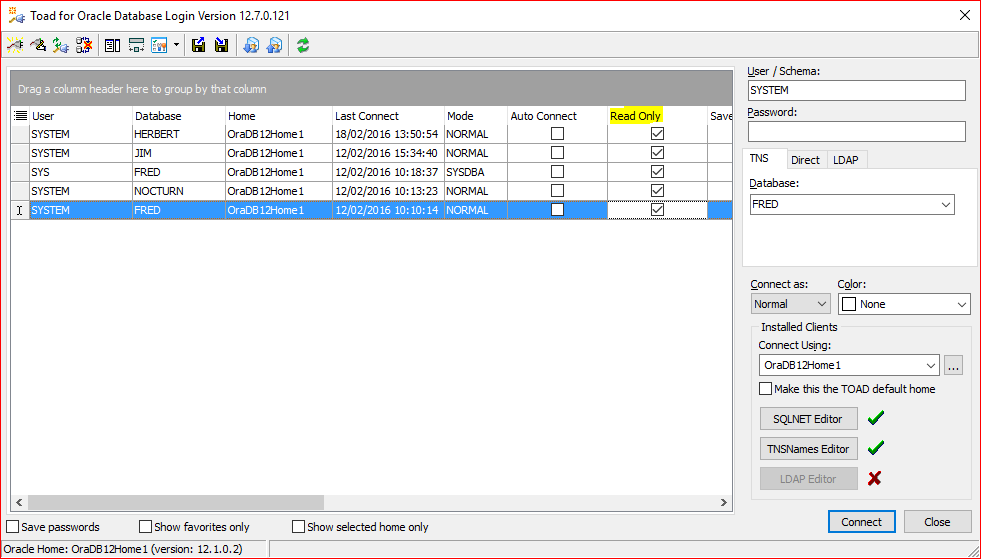

But what about the DBA himself? Yes, this could be a problem as well. My personal solution: I’m using Toad for all necessary operations with read-only database access.

This little checkbox is very useful as it doesn’t allow an INSERT, UPDATE, DELETE regardless if you are using schema browser or any other graphical tool, but it also works within the editor.

So if I need to make a change I have to explicitly log out, change the box, and login again. This might sound cumbersome, but if you have hundreds of databases to manage you will appreciate it.

But still there is a chance for a failure, where you need to rollback an application. If this application is the only one in the database, you can simply flashback the databases to a former point in time. To use this feature, you need to set up the database in flashback mode.

Flashback Database

Flashback database only works with archive log mode enabled – that should be a no brainer for production databases. Unfortunately, you need to shut down the database to enable flashback and you need to calculate the space for the flashback logs.

SQL> SHUTDOWN IMMEDIATE SQL> STARTUP MOUNT SQL> ALTER DATABASE FLASHBACK ON; SQL> ALTER DATABASE OPEN; SQL> SELECT flashback_on, log_mode FROM v$database; FLASHBACK_ON LOG_MODE ------------------ ------------ YES ARCHIVELOG

The main question is: how far back you need to roll your database at max? Is it one hour or one day. Every time frame older than one day is often useless as you lose too many transactions. But even three days might be worth thinking of because this might have been a weekend. Let’s assume you have a batch job, which runs on Friday evening and on Monday people complain about the data. With the three days’ window and the assumption that there have not been that many transactions in the meantime you can flashback the database within few minutes to resolve the issue. This implies that you have sufficient space to hold three days of archived redo logs plus three days of flashback logs – probably not a small amount of data, but much faster as if you had to import your schema after this kind of error.

But what if you have multiple schemas in one database? Other application owners might not be happy if you roll back their daily work just because one application failed.

If you have a standby database with flashback database enabled as well you can simply flashback the standby database to the point in time you need and export/import the data you need.

Assuming that you are using Oracle Dataguard it’s a four step approach:

- Stop the Dataguard apply process

- Create a guaranteed restore point to later resync the standby database

- Now you can flashback the standby database and open it read-write or read-only.

- Now you are able to query the database or to export the data. After you’ve finished your research you can close the database roll it forward to the guaranteed restore point and resync it with your production database.

DGMGRL> EDIT DATABASE 'HERBERT_S2' SET STATE='APPLY-OFF'; DGMGRL> DISABLE CONFIGURATION;

SQL> CREATE RESTORE POINT before_open_standby GUARANTEE FLASHBACK DATABASE;

SQL> FLASHBACK DATABASE TO TIMESTAMP "to_date('YYYY-MM-DD HH24:MI:SS’,’2016-02-29 10:00:00')";

SQL> ALTER DATABASE OPEN;

SQL> SHUTDOWN IMMEDIATE SQL> STARTUP MOUNT SQL> FLASHBACK DATABASE TO RESTORE POINT before_open_standby; SQL> ALTER DATABASE CONVERT TO PHYSICAL STANDBY; DGMGRL> ENABLE CONFIGURATION DGMGRL> EDIT DATABASE 'HERBERT_S2' SET STATE='APPLY-ON';

This will minimize the impact of a rollback of transactions to a minimum while eliminating the need for daily exports though saving space and shrinking the time frame for maintenance.

In one of my next blogs I will extend this functionality to the new multitenant database architecture.

No comments on “Datapump or Flashback Database”