In the Oracle 12 New Features Guide you will find this sentence:

“The maximum size of the VARCHAR2, NVARCHAR2, and RAW data types has been increased from 4,000 to 32,767 bytes“

Increasing the allotted size for these data types allows users to store more information in character data types before switching to large objects (LOBs). This is especially useful for brief textual data types and the capabilities to build indexes on these types of columns.” (

Oracle® Database New Features Guide 12c Release 1 (12.1) E17906-16 Page 1-13

What great news! Especially if you want to upgrade your database to Unicode character set (AL32UTF8) and might have reached the “old” limit of VARCHAR2 which was 4000 byte (and not characters!).

Sample Table

The sample database was created with AL32UTF8 character set.

In the first example I’m trying to create a table with a VARCHAR2(32000 CHAR) field:

CREATE TABLE extendedtable (

id NUMBER GENERATED BY DEFAULT AS IDENTITY PRIMARY KEY,

textfield VARCHAR(32000 CHAR),

length NUMBER,

lengthb NUMBER,

lengthc NUMBER)

TABLESPACE users;

Textfield VARCHAR2(32000 CHAR), ERROR at line 3: ORA-00910: specified length too long for its datatype

So it looks like it’s not that easy to use the extended data types. But I’m even more interested what happens if you create a table with a “normal” size and add some special characters to it.

So the second version doesn’t exceed the standard specification we had in earlier versions:

Now I’m going to insert some strings into the textfield and calculate the length as default (length), in byte (lengthb) and in characters (lengthb). With the routine I’m using the first textfield has 500 characters and that doubles until it hits the max string size.

The first string is without any special characters but only A-Z, a-z and 1-0.

The screenshot shows the expected result: the maximum field length is 4000 (byte or character?).

To verify

Running the same script, which doubles the field with every new insert I run into the following error message:

INSERT INTO standardtable (textfield, length, lengthb, lengthc) * ERROR at line 1: ORA-14089: result of string concatenation is too long

And the table content shows why:

The table now has 7 rows and the last row gives some impression on the limitations:

The binary length (lengthb) is 2008 byte while for both length and lengthc the value is 2000. So the 8th row would have exceeded the 4000 byte limitation again!

So what about the message “The maximum size of the VARCHAR2, NVARCHAR2, and RAW data types has been increased from 4,000 to 32,767 bytes.”?

Extended Data Type Usage

There is a new Oracle parameter named MAX_STRING_SIZE which default is “STANDARD”. But don’t try to update that string to “EXTENDED” immediate! The database has to be upgraded to use the extended data types and the procedure is irreversible so there’s no way back.

Upgrade NON-CDB

For a Non-CDB Database the entire database has to be upgraded to support the extended data types with the following procedure:

SQL> SHUTDOWN IMMEDIATE SQL> STARTUP UPGRADE SQL> ALTER SYSTEM SET max_string_size=extended; SQL> @?/rdbms/admin/utl32k SQL> SHUTDOWN IMMEDIATE SQL> STARTUP

First the database has to be brought into the “upgrade” mode. Only in this mode it’s possible to modify the parameter max_string_size in memory (so without specifying scope=spfile). Now the data dictionary must be upgraded to support the 32k limits for VARCHAR2, NVARCHAR2 and RAW with the SQL-Script utl32k.sql. That script will take less than one minute to finish and after that the database is ready to use the extended data types.

Upgrade PDB

With the multitenant database each individual PDB can have its own setting for max_string_size. The parameter is marked as “ISPDB_MODIFIABLE”. To report the settings for all containers it’s necessary to use v$system_parameter or gv$system_parameter because v$parameter or “show parameter” only lists the parameter of the CDB.

This picture shows that the current value of max_string_size is “STANDARD” for all containers (CON_ID = 0).

The following example shows how to upgrade the PDB “pdbnormal” to use extended data types:

SQL> ALTER SESSION SET CONTAINER = pdbnormal; SQL> ALTER PLUGGABLE DATABASE CLOSE IMMEDIATE; SQL> ALTER PLUGGABLE DATABASE OPEN UPGRADE; SQL> ALTER SYSTEM SET max_string_size=extended; SQL> @?/rdbms/admin/utl32k SQL> ALTER PLUGGABLE DATABASE CLOSE; SQL> ALTER PLUGGABLE DATABASE OPEN;

As a result the PDB is using its own value formax_string_size:

After the upgrade I run the tests again:

First the “normal” table with the VARCHAR2(4000 CHAR) and the challenge to add 4000 characters including the “€” symbol:

It works! As you can see the byte length exceeds the 4000 bytes (actually 4016 bytes) for the 8th row. But as textfield was created with VARCHAR2(4000 CHAR) it’s not possible to insert a larger string.

Second the table with the huge VARCHAR2 size (32000):

It works as well and now we can store up to 32000 characters (as long as those do not exceed 32767 byte) in our “textfield”.

So why is it neccessary to upgrade the database?

The answer can be found on page 2-28 in the “Oracle® Database SQL Language Reference 12c Release 1 (12.1) E17209-15”:

“A VARCHAR or NVARCHAR2 data type with a declared size of greater than 4000 bytes, or a RAW data type with a declared size of greater than 2000 bytes, is an extended data type.”

Extended data type columns are stored out-of-line, leveraging Oracle’s LOB technology. The LOB storage is always aligned with the table. In tablespaces managed with Automatic Segment Space Management (ASSM), extended data type columns are stored as SecureFiles LOBs. Otherwise, they are stored as BasicFiles LOBs. The use of LOBs as a storage mechanism is internal only. Therefore, you cannot manipulate these LOBs using the DBMS_LOB package.”

Oracle® Database SQL Language Reference 12c Release 1 (12.1) E17209-15

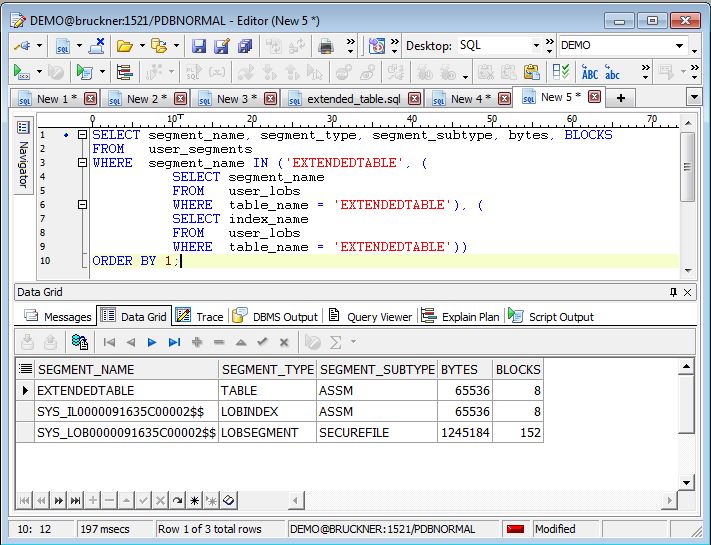

Unfortunately if you don’t know this limitation you might end in some trouble because of the table layout and the calculation of the size of the table. If you analyze the table extendedtable the result is the following:

As you see the number of blocks is 5 therefore the total size is 5 x 8192 = 40k – with an average space of 6550 per block! Comparing that with the actual values in the table:

SQL> SELECT sum(lengthb(textfield))

FROM extendedtable;

SUM(LENGTHB(TEXTFIELD))

-----------------------

71254

So the real size of the table is about 72kB.

The following query gives a better impression on the size of the table:

So the total size of the table is about 1,5 MB!

Conclusion

As long as a data field doesn’t exceed the 4000 byte for a VARCHAR2 you won’t see any differences to the “standard” functionality and expectation. But with the lift of the limitation for the string size you might hit another limitation: The total size of an indexed column is about 75% of the database block size. If you are using VARCHAR2 size far above 4000 you might not be able to create an index on the field and you should probably look for a tablespace with 32k blocksize to minimize the risk.

In the past we had a problem when migrating from an one byte character set (e.g. WE8ISO8859P15 or WE8MSWIN1252) to unicode with the data type CLOB. The size of the CLOB column will double! This behaviour has not been changed with Oracle 12c:

“Data in CLOB columns is stored in the AL16UTF16 character set when the database character set is multibyte, such as UTF8 or AL32UTF8. This means that the storage space required for an English document doubles when the data is converted.”

Oracle® Database Globalization Support Guide 12c Release 1 (12.1) E17750-11 Page 6 – 13

As a consequence does this imply that all VARCHAR2 fields which exceeds the 4000 byte will now need double the space? Luckily not. The internal conversion from VARCHAR2 to CLOB does not change the character set so the values ar still stored in AL32UTF8 – otherwise our example with the extendedtable wouldn’t have been successful.

So if you have to choose between max_string_size=extended or converting to CLOBs I would suggest that the new Oracle 12c features is the better way to follow. But if you are already using CLOBs you might want to stay with them as long as you have sufficient space in your database.

Thank you very much